During my time as a system administrator, I regularly analyzed script performance to find out which items were affecting overall system performance. Our team needed to determine not only what to analyze but also how to analyze it. Using tools that the software supplier was familiar with helped ensure that everyone was speaking a common language. That way, any differences in interpretation would at least be based on common data points.

For Millennium®, script analysis includes determining how application scripts, custom queries, and reports impact the Oracle database. Millennium has tools that create snapshots of Oracle processing and apply a weighted scale for script analysis. The scoring system accounts for the larger impact that, for example, disk reads/writes and executions have on a system compared with the lesser impact of reading from and writing to memory. Oracle’s own tracking of CPU processing should closely resemble the weighted result produced by the score.
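To make the idea of a weighted score concrete, here is a minimal sketch. The weights are made up for illustration and are not Millennium's actual values or formula; the counter names simply mirror statistics that Oracle tracks (buffer gets, disk reads, executions).

```python
# Hypothetical weights, chosen only to illustrate the idea that disk reads
# and executions count for more than memory (buffer) access.
# These are NOT Millennium's real weights.
WEIGHTS = {"DISK_READS": 100.0, "EXECUTIONS": 10.0, "BUFFER_GETS": 1.0}


def weighted_score(stats):
    """Sum each counter multiplied by its (assumed) weight."""
    return sum(weight * stats.get(name, 0) for name, weight in WEIGHTS.items())


# Two made-up scripts: one works mostly in memory, one goes to disk.
memory_heavy = {"BUFFER_GETS": 5000, "DISK_READS": 0, "EXECUTIONS": 1}
disk_heavy = {"BUFFER_GETS": 100, "DISK_READS": 200, "EXECUTIONS": 1}

print(weighted_score(memory_heavy))  # 5000 + 0 + 10 = 5010.0
print(weighted_score(disk_heavy))    # 100 + 20000 + 10 = 20110.0
```

Even though the first script touches far more data, the second one scores higher because its work lands on disk, which is the behavior a weighted scale is meant to surface.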

The following example shows you how to run snapshots and then compare the data. The files created are saved in your ccluserdir by default. If you do not specify a file extension for the snapshot file or the analysis file, the extension defaults to .DAT; however, you can specify a different extension, and we recommend using .CSV. For simplicity, the filenames in our example are generic, but it is beneficial to include the domain name and a date/time stamp in each filename to avoid confusion.

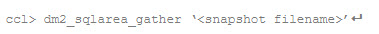
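If you generate filenames outside of Millennium, the naming suggestion above (domain name plus a date/time stamp, with a .CSV extension) could be sketched like this. The function name, the label argument, and the exact filename pattern are my own assumptions, not a Millennium convention.

```python
from datetime import datetime


def snapshot_filename(domain, label, when, ext="csv"):
    """Build a name such as 'prod_snap1_20240131_0830.csv'.

    The domain/label/timestamp pattern is a hypothetical convention.
    """
    stamp = when.strftime("%Y%m%d_%H%M")
    return f"{domain}_{label}_{stamp}.{ext}".lower()


# Example with a fixed timestamp so the result is predictable.
print(snapshot_filename("PROD", "snap1", datetime(2024, 1, 31, 8, 30)))
# prints: prod_snap1_20240131_0830.csv
```

In day-to-day use you would pass `datetime.now()` so that each snapshot gets a unique, sortable name.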

The format of the snapshot command is:

Two snapshots are required to perform an analysis. The format of the analyze command is:

The analyze command produces a .DAT file (or a .CSV file, if you specify that extension in the snap_analysis_file name) containing the following columns (the exact columns may vary with your Millennium code level): SCRIPT_OBJECT, TYPE, START_DATE, END_DATE, TIME_IN_MINUTES, PERCENT, SCORE, BUFFER_GETS, DISK_READS, ROWS_PROCESSED, EXECUTIONS, BUFFER_RATIO, DISK_RATIO, EXECUTIONS_PER_MIN, CPU_TIME, MAX_SHARABLE_MEM, OUTLINE_CATEGORY, OPTIMIZER_MODE, HASH, FIRST_LOAD_TIME, SQL.
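Once you have an analysis file, a quick first pass is to rank scripts by SCORE. The sketch below assumes the file is comma-delimited with a header row containing the column names listed above; check this against your own output before relying on it. The sample rows are made up and stand in for a real file.

```python
import csv
import io


def load_analysis(handle):
    """Read an analysis file, assumed comma-delimited with a header row."""
    return list(csv.DictReader(handle))


def top_by_score(rows, n=10):
    """Return the n rows with the highest SCORE, highest first."""
    return sorted(rows, key=lambda r: float(r["SCORE"] or 0), reverse=True)[:n]


# Made-up sample data; a real file would be opened with open(path, newline="").
sample = io.StringIO(
    "SCRIPT_OBJECT,SCORE,EXECUTIONS,DISK_READS\n"
    "SCRIPT_A,120.5,10,300\n"
    "SCRIPT_B,980.0,4000,50\n"
    "SCRIPT_C,45.0,2,10\n"
)
rows = load_analysis(sample)
for row in top_by_score(rows, n=2):
    print(row["SCRIPT_OBJECT"], row["SCORE"])
```

With the sample data, SCRIPT_B is listed first and SCRIPT_A second.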

If you are proficient in script analysis, feel free to use these commands. Because the analyze command compares elements that are common to the two snapshot files, we recommend using snapshots that are no more than eight hours apart. For the less proficient, I will guide you through script analysis over the next few weeks. My topics will include:

- Analyzing scripts that have a high impact each time they run.

- Analyzing scripts that create a high impact due to the number of times they run.

- Automating the collection of script analysis data.
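As a rough preview of the first two topics, the sketch below separates scripts that are expensive each time they run from scripts that add up because they run often. Dividing SCORE by EXECUTIONS is my own simplification, not a Millennium-defined metric, and the rows are made-up values using column names from the analysis file.

```python
def split_by_impact(rows, n=5):
    """Return (heaviest per execution, most frequently executed) lists."""
    enriched = []
    for r in rows:
        execs = float(r["EXECUTIONS"])
        score = float(r["SCORE"])
        # Assumed proxy for per-run cost; guard against zero executions.
        per_exec = score / execs if execs > 0 else 0.0
        enriched.append({**r, "SCORE_PER_EXEC": per_exec})
    heavy = sorted(enriched, key=lambda r: r["SCORE_PER_EXEC"], reverse=True)[:n]
    frequent = sorted(enriched, key=lambda r: float(r["EXECUTIONS"]), reverse=True)[:n]
    return heavy, frequent


# Made-up rows in the shape csv.DictReader would return.
example_rows = [
    {"SCRIPT_OBJECT": "SCRIPT_A", "SCORE": "120.5", "EXECUTIONS": "10"},
    {"SCRIPT_OBJECT": "SCRIPT_B", "SCORE": "980.0", "EXECUTIONS": "4000"},
    {"SCRIPT_OBJECT": "SCRIPT_C", "SCORE": "45.0", "EXECUTIONS": "2"},
]
heavy, frequent = split_by_impact(example_rows, n=1)
print("Heaviest per run:", heavy[0]["SCRIPT_OBJECT"])
print("Most frequent:", frequent[0]["SCRIPT_OBJECT"])
```

Here SCRIPT_C costs the most per run (45.0 / 2 = 22.5), while SCRIPT_B dominates by sheer volume; the two cases usually call for different fixes.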

Prognosis: The execution of scripts is necessary for Millennium to function. Analyzing their execution will allow you to correct any negative impacts on overall system performance, thus creating a more consistent end-user experience.