If you’re preparing to upgrade to Millennium 2007.18, 2007.19 or 2010.01, I offer the following 14 recommendations to help minimize the known risks to your production environment. If you have already upgraded, you may want to review these items and see how many of them you can do to improve your current stability. They apply whether you are on AIX, HP-UX or VMS (although VMS applies only through the 2007.19 upgrade since Cerner doesn’t support this platform on 2010.01 and above).

1. The hidden MQ queue. Set the MaxDepth of the CERN.ADMIN.REPLY queue to 15,000 if you are a two-node configuration and to at least 20,000 if you have more than two nodes on the application side of Millennium. Without this change, the queue will fill up and cause nodal MQ stability problems, and you will have an extremely high probability of experiencing one or more significant production issues after the upgrade.
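The sizing rule above can be sketched as a small helper. This is only an illustration: it assumes CERN.ADMIN.REPLY is defined as a local queue and that you apply the change through MQSC (`runmqsc`) rather than another admin tool. The function names are hypothetical, and the article gives no figure for single-node systems, so the sketch rejects them instead of guessing.

```python
def admin_reply_max_depth(app_node_count: int) -> int:
    """Suggested MaxDepth for CERN.ADMIN.REPLY, per the rule of thumb above."""
    if app_node_count < 2:
        # Assumption: the article only covers two or more application nodes.
        raise ValueError("no recommendation given for fewer than two nodes")
    if app_node_count == 2:
        return 15000
    return 20000  # "at least 20,000" for more than two nodes


def mqsc_alter_command(app_node_count: int, queue: str = "CERN.ADMIN.REPLY") -> str:
    """Build an MQSC command string; assumes the queue is a local queue."""
    depth = admin_reply_max_depth(app_node_count)
    return f"ALTER QLOCAL('{queue}') MAXDEPTH({depth})"


if __name__ == "__main__":
    print(mqsc_alter_command(2))
    print(mqsc_alter_command(4))
```

Review the generated command before running it against a queue manager, and treat 20,000 as a floor rather than a fixed value on larger configurations.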

To clear the CERN.ADMIN.REPLY queue in uptime, you have to execute some steps that might not make a lot of sense. Typically, to delete all the messages/transactions from a queue, you simply go into Panther’s Queues control, right-click on the queue name, and select Clear Queue. Unfortunately, this method does not work with the CERN.ADMIN.REPLY queue because the QCP server (SCP Entry ID 27, Queue Control Panel) maintains a connection with the queue. Instead, go into Panther’s Queues control, select the CERN.ADMIN.REPLY queue, right-click it, select View Messages, select all of the messages, click the Delete button, and, finally, verify the deletion.

If you do not have Panther and are using QCPView either from the back end or front end, you cannot perform a “purge admin.reply-all” command to clear the reply queue. Instead, you need to type the following commands:

- show queue admin.reply (to get the current depth)

- dir admin.reply

- purge admin.reply 1 – (the current depth number, such as 14594) (Please be patient. This can take several seconds or more.)

- Run show queue admin.reply again to verify that there are no more messages/transactions in the queue. If there are, repeat the dir and purge commands until the queue is empty.

- exit

2. Java server stress. SCP Entry ID 352 (Clinical Information) has five Java services running in a single Java Virtual Machine (JVM). From what I have seen, this is too many Java services in a single JVM. I recommend you split SCP 352 into two SCP Entry IDs. The parameters section of Clinical Information typically looks like this: messaging clinical_event messagingAsync FamilyHistory documentation. I suggest copying 352 to 376 (or the next available SCP Entry ID) and simply leaving 352 with clinical_event, FamilyHistory and documentation in the parameters field. Give 376 a description such as Clinical Info Messaging with the following two entries in the parameters field: messaging and messagingAsync.
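To make the split concrete, here is a minimal sketch that takes the typical parameters string quoted above and divides it into the two parameter fields. It only manipulates strings; it does not touch SCP, and the function name is hypothetical.

```python
# Typical parameters field for SCP Entry ID 352, as quoted above.
ORIGINAL_PARAMS = "messaging clinical_event messagingAsync FamilyHistory documentation"

# Services the article suggests moving to the new entry (for example 376).
MESSAGING_SERVICES = {"messaging", "messagingAsync"}


def split_parameters(params: str, moved: set[str]) -> tuple[str, str]:
    """Return (parameters kept on the original entry, parameters for the copy)."""
    services = params.split()
    kept = [s for s in services if s not in moved]
    relocated = [s for s in services if s in moved]
    return " ".join(kept), " ".join(relocated)


if __name__ == "__main__":
    kept, relocated = split_parameters(ORIGINAL_PARAMS, MESSAGING_SERVICES)
    print("SCP 352 parameters:", kept)
    print("New entry parameters:", relocated)
```

If your parameters field differs from the typical one, pass your own string; any service not named in the moved set stays on the original entry.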

The other Java tuning recommended for your system by CMSS or whichever upgrade guide you are using should be done to both SCP entries. If you skip this tuning, you are likely to experience backlogs in MQ’s messagingAsync queue, which will eventually back up the Java server (SCP 352) and slow down the clinical_event, FamilyHistory, documentation and messaging services so much that clinicians will find Millennium unusable. The messagingAsync MQ backlog will make the physician’s Inbox so slow that it too becomes unusable.

3. Citrix crashes. You may experience an increase in crashes after the upgrade. To determine how many crashes are normal for you, be sure to use the Millennium crash collector before you upgrade. I would suggest having at least a couple of weeks of information from the Lights On Network to establish your baseline.

Several clients have had crashes fill up their Citrix server drives, which can have a significant downstream impact on an organization. Watch carefully for how many dump files are created on the Citrix servers.
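One simple way to watch dump-file growth is to count the files and total their size on a schedule. The sketch below assumes dump files end in `.dmp` and live under a single directory tree; both the extension and the example path are assumptions, not something the crash collector documents here.

```python
from pathlib import Path


def summarize_dumps(dump_dir: str, pattern: str = "*.dmp") -> tuple[int, int]:
    """Return (file count, total bytes) for dump files under dump_dir."""
    root = Path(dump_dir)
    if not root.is_dir():
        return (0, 0)
    files = [p for p in root.rglob(pattern) if p.is_file()]
    return (len(files), sum(p.stat().st_size for p in files))


if __name__ == "__main__":
    # Hypothetical location; substitute wherever your Citrix servers write dumps.
    count, total = summarize_dumps(r"C:\CrashDumps")
    print(f"{count} dump files, {total / 1024 ** 2:.1f} MiB")
```

Recording these two numbers daily before the upgrade gives you a baseline to compare against afterward.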

4. Exception queue transactions. Consider disabling MQ Auto Replay during the upgrade. If transactions are being sent to the MQ Exception queue because of build issues or other environmental issues, they are likely to fail again when Panther replays them, adding more strain to MQ. Once all build issues and environmental issues are resolved, turn the MQ Auto Replay back on.

5. Message log disk I/O increases. Error log writing often increases after an upgrade, so I suggest you determine your current wrap times. Which servers have mlg files wrapping in less than a day? Which servers have mlg files wrapping in less than 10 days? If you know what normal is before the upgrade, you can tell what is not normal after the upgrade and can log points to resolve your issues.
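The two questions above amount to bucketing each server's wrap time. The sketch below assumes you can obtain the oldest and newest record timestamps from a message log that has already wrapped at least once, and it treats the span between them as the wrap time. How you read those timestamps is left out, and the server names and values in the example are made up.

```python
from datetime import datetime, timedelta


def wrap_time(oldest_record: datetime, newest_record: datetime) -> timedelta:
    """Approximate wrap time as the span covered by a full, wrapped log."""
    return newest_record - oldest_record


def classify(wrap: timedelta) -> str:
    """Bucket a wrap time using the article's two thresholds."""
    if wrap < timedelta(days=1):
        return "under 1 day"
    if wrap < timedelta(days=10):
        return "under 10 days"
    return "10 days or more"


if __name__ == "__main__":
    # Hypothetical baseline data: server name -> (oldest, newest) timestamps.
    baseline = {
        "server_a": (datetime(2010, 3, 1, 8, 0), datetime(2010, 3, 1, 20, 0)),
        "server_b": (datetime(2010, 2, 20, 8, 0), datetime(2010, 3, 1, 8, 0)),
    }
    for name, (oldest, newest) in baseline.items():
        print(name, classify(wrap_time(oldest, newest)))
```

Running the same classification after the upgrade shows which servers have moved into a faster-wrapping bucket.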

6. Confusing MQ log files. Monitor MQ .FDC and log files in /var/mqm/errors on each application node. For some clients, we have found .FDC files that were months and years old. These add confusion when there are MQ and throughput issues. If an .FDC file is more than two weeks old and you are not actively engaging your support team to resolve the issue, it is just consuming disk space.
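A quick way to find stale files is to list every .FDC file whose modification time is older than two weeks. This sketch only reports candidates and deletes nothing; the function name is hypothetical, and it assumes modification time is a fair proxy for when the failure was recorded.

```python
import time
from pathlib import Path


def stale_fdc_files(error_dir="/var/mqm/errors", max_age_days=14, now=None):
    """Return .FDC files in error_dir last modified more than max_age_days ago."""
    root = Path(error_dir)
    if not root.is_dir():
        return []
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    return sorted(
        p for p in root.glob("*.FDC")
        if p.is_file() and p.stat().st_mtime < cutoff
    )


if __name__ == "__main__":
    for path in stale_fdc_files():
        age_days = (time.time() - path.stat().st_mtime) / 86400
        print(f"{path}  ({age_days:.0f} days old)")
```

Run it on each application node and check the list against any open support issues before archiving or removing anything.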

7. Extra message log writing. We have seen several servers start logging unnecessary messages for certain events after upgrades. I suggest you consider putting the following events in messages.suppress in $cer_mgr:

- SRV_UnpackFailure. Because of the potential risk for troubleshooting SCP Entry ID 330 (PM Reg), you may want to verify that there are no PM Reg server issues before suppressing this event.

- CRM_ClientTimeZone.

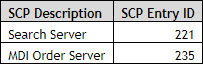

8. Too much extraneous logging. Set the following servers to LogLevel 0 to reduce extraneous logging.

9. MQ information loss. Set the following servers to LogLevel 1. Otherwise, you will miss MQ issues these servers can experience.

10. Missing or incorrect security settings for a file. Please verify that the file /cerner/mgr/workflow-orders-definitions.xml exists (for VMS clients, set def cer_mgr and look for it there). Verify the ownership and permissions on the file as well.
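On the UNIX platforms, the check can be scripted roughly as follows. The sketch only reports what it finds, because the article does not state which owner or permission bits are correct for your site; compare the output with a known-good environment. It uses the Unix-only `pwd` and `grp` modules, so it does not apply to VMS.

```python
import grp
import os
import pwd
import stat

WORKFLOW_FILE = "/cerner/mgr/workflow-orders-definitions.xml"


def describe_file(path):
    """Return owner, group and mode string for path, or None if it is missing."""
    try:
        st = os.stat(path)
    except FileNotFoundError:
        return None
    try:
        owner = pwd.getpwuid(st.st_uid).pw_name
    except KeyError:
        owner = str(st.st_uid)  # uid has no passwd entry
    try:
        group = grp.getgrgid(st.st_gid).gr_name
    except KeyError:
        group = str(st.st_gid)  # gid has no group entry
    return {"owner": owner, "group": group, "mode": stat.filemode(st.st_mode)}


if __name__ == "__main__":
    info = describe_file(WORKFLOW_FILE)
    if info is None:
        print(f"MISSING: {WORKFLOW_FILE}")
    else:
        print(f"{WORKFLOW_FILE}: {info['mode']} {info['owner']}:{info['group']}")
```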

11. MQ running in mixed mode. I would not run an MQ 6 client with an MQ 5.x back end. Upgrade the application nodes to MQ 6 when Millennium installs the MQ 6 client. It may involve some downtime, but it’s worth the scheduled interruption in order to produce a stable production environment.

12. Request class routing needs. The script PM_SCH_GET_PERSONS should be request class routed to a CPM Script server clone whose kill-time property is 5 minutes. You will probably need additional instances of the server you move this request to in order to handle go-live volumes.

13. Wrapping issues with message logs. Increase the mlg max records for cmb_0000, cmb_0027, cmb_0029, cmb_0033 and mqalert to at least 16,384. Please verify that you have enough file system space in $cer_log before increasing these. You will want the information held in these files to assist in troubleshooting post-upgrade issues, especially if you upgraded MQ as well as Millennium.
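A rough space check before raising the limits might look like the sketch below. It rests on a loud assumption: that a message log file grows in proportion to its max-records setting, which the article does not confirm. The current file sizes and record limits are values you supply, and the 10% reserve is an arbitrary safety margin.

```python
import shutil


def estimated_extra_bytes(current_size, current_max_records, new_max_records=16384):
    """Estimate extra bytes for one log, assuming size scales with max records."""
    if current_max_records <= 0:
        raise ValueError("current_max_records must be positive")
    if new_max_records <= current_max_records:
        return 0
    return current_size * new_max_records // current_max_records - current_size


def has_room(log_dir, extra_bytes_per_file, reserve_fraction=0.10):
    """True if the file system keeps reserve_fraction free after the growth."""
    usage = shutil.disk_usage(log_dir)
    needed = sum(extra_bytes_per_file)
    return usage.free - needed >= usage.total * reserve_fraction


if __name__ == "__main__":
    # Hypothetical example: five logs of 8 MiB each, currently at 4,096 records.
    extras = [estimated_extra_bytes(8 * 1024 ** 2, 4096) for _ in range(5)]
    print("Estimated extra bytes:", sum(extras))
    print("Enough room here:", has_room(".", extras))  # point this at $cer_log
```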

14. QCP server instances. Have you heard the urban myth that claims you can only have one instance of the QCP server (SCP Entry ID 27) running on an application node? Don’t believe it. QCP is simply used by various servers and client applications to read and update information from the local MQ queue manager. It is a shared service and as such works great if multiple instances are running. Think of it like SCP Entry ID 51 (CPM Script): if it queues, you add another instance until it no longer queues. Please run enough instances so it does not queue.